Investor Summary

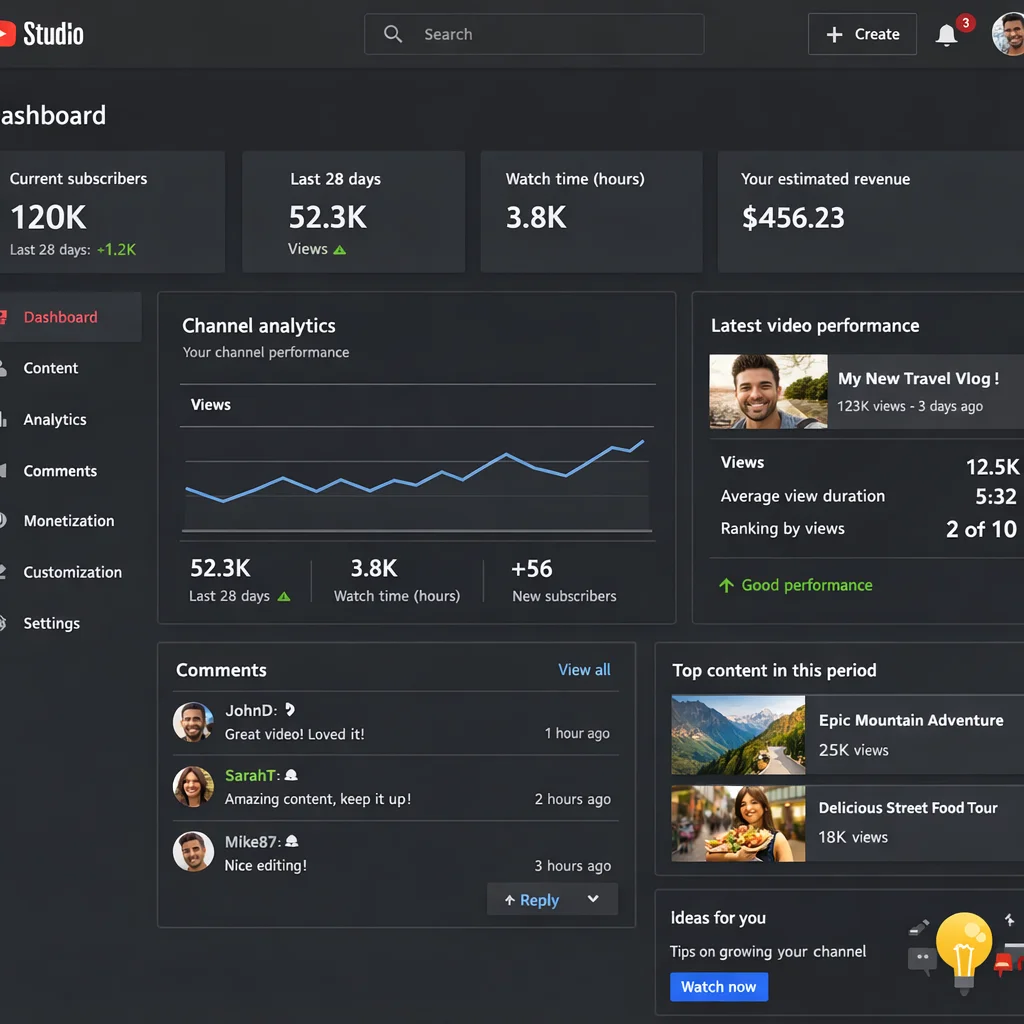

Video content demand is massive, but production is expensive and slow. This system automates the core workflow from content ingestion through scripts, visuals, narration, assembly, and upload, enabling a scalable content operation with consistent output quality at a fraction of traditional costs.

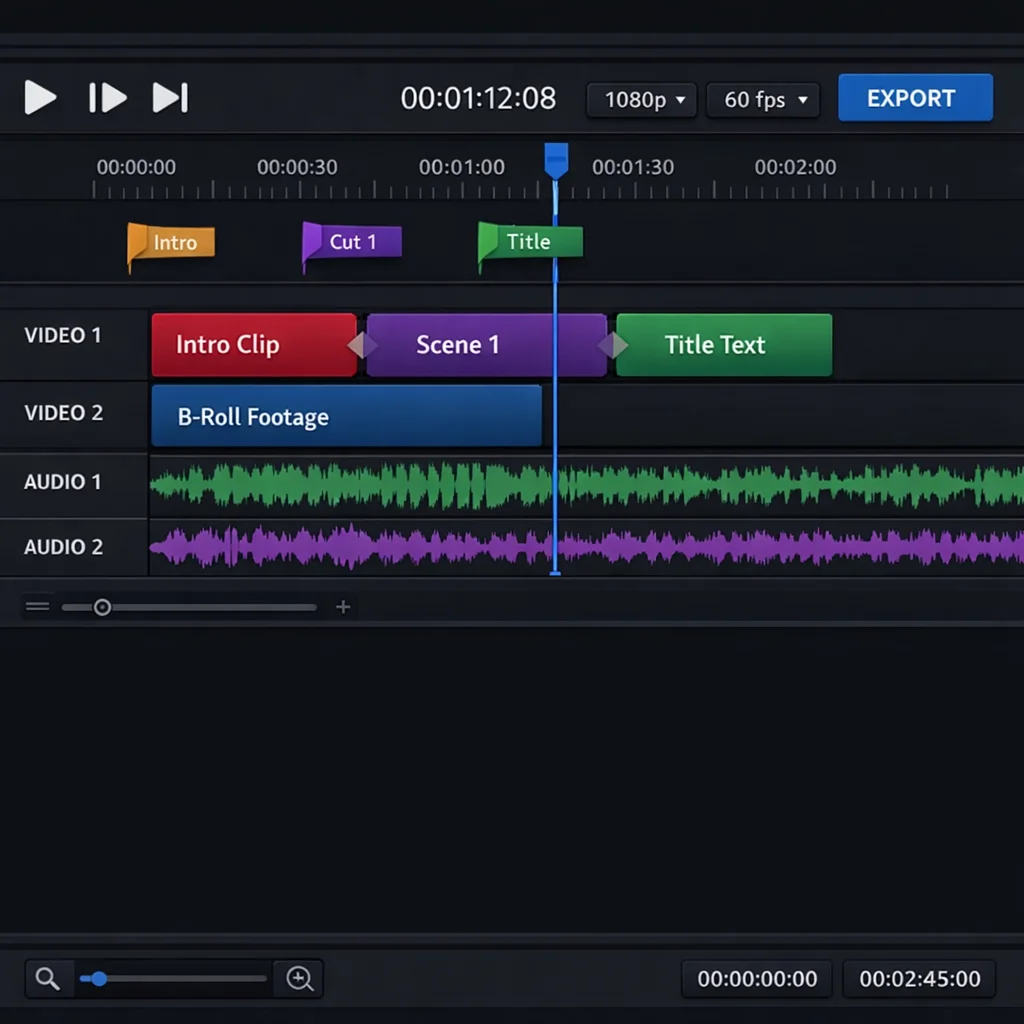

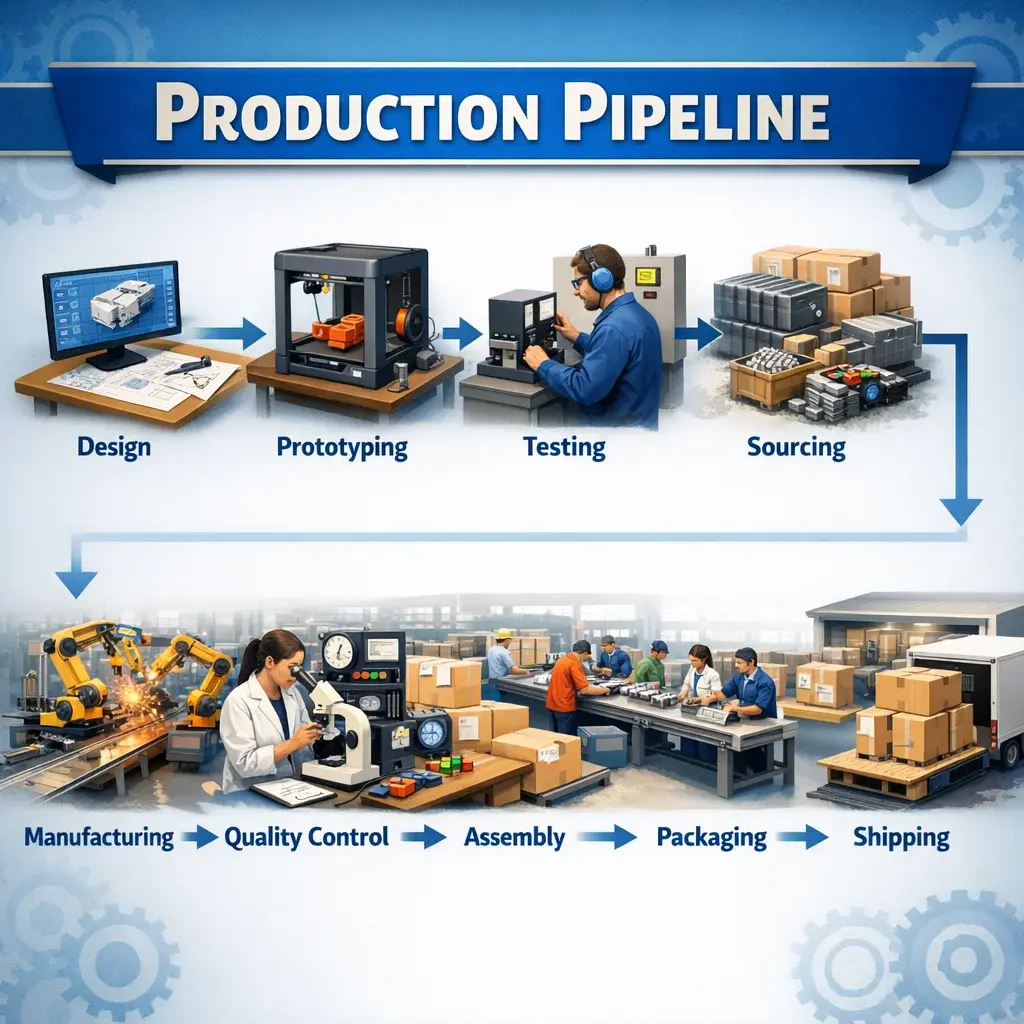

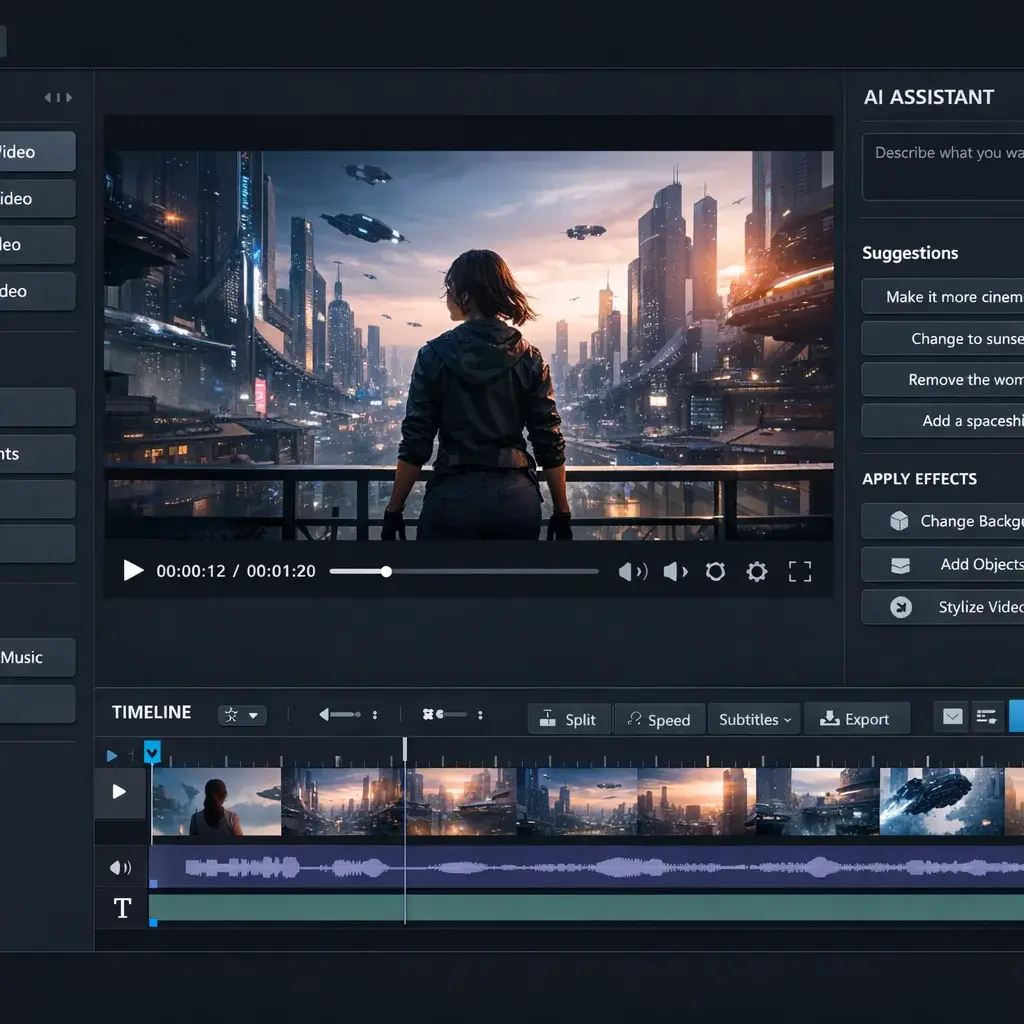

Traditional video production for a single 8-to-12-minute YouTube video requires a writer, a graphic designer, a voice actor, a video editor, and a project manager. That team produces one video per week at a cost of $1,500 to $5,000 per finished asset. Our pipeline replaces the entire human chain with an orchestrated sequence of AI services: Firecrawl extracts and structures the source content, LLMs transform it into a narration-ready script with storyboard annotations, fal.ai generates scene-matched imagery, ElevenLabs synthesizes studio-grade voiceover, and the assembly engine composites everything into a timeline-accurate render with transitions, overlays, and background audio. The finished asset uploads to YouTube through OAuth with AI-optimized metadata. Total elapsed time: minutes, not days. Per-unit cost: single-digit dollars, not thousands.

The architecture is built for horizontal scale. Each pipeline stage is a discrete module communicating through a structured event bus, meaning the system can process dozens of videos concurrently without contention. Provider-level rate limiting, retry logic with exponential backoff, and automatic failover between alternative services ensure that transient API outages do not halt production. Cost accounting is tracked per video, giving operators granular unit economics across every AI provider invocation. This is infrastructure for running a content operation as a business, not a creative exercise.