Investor Summary

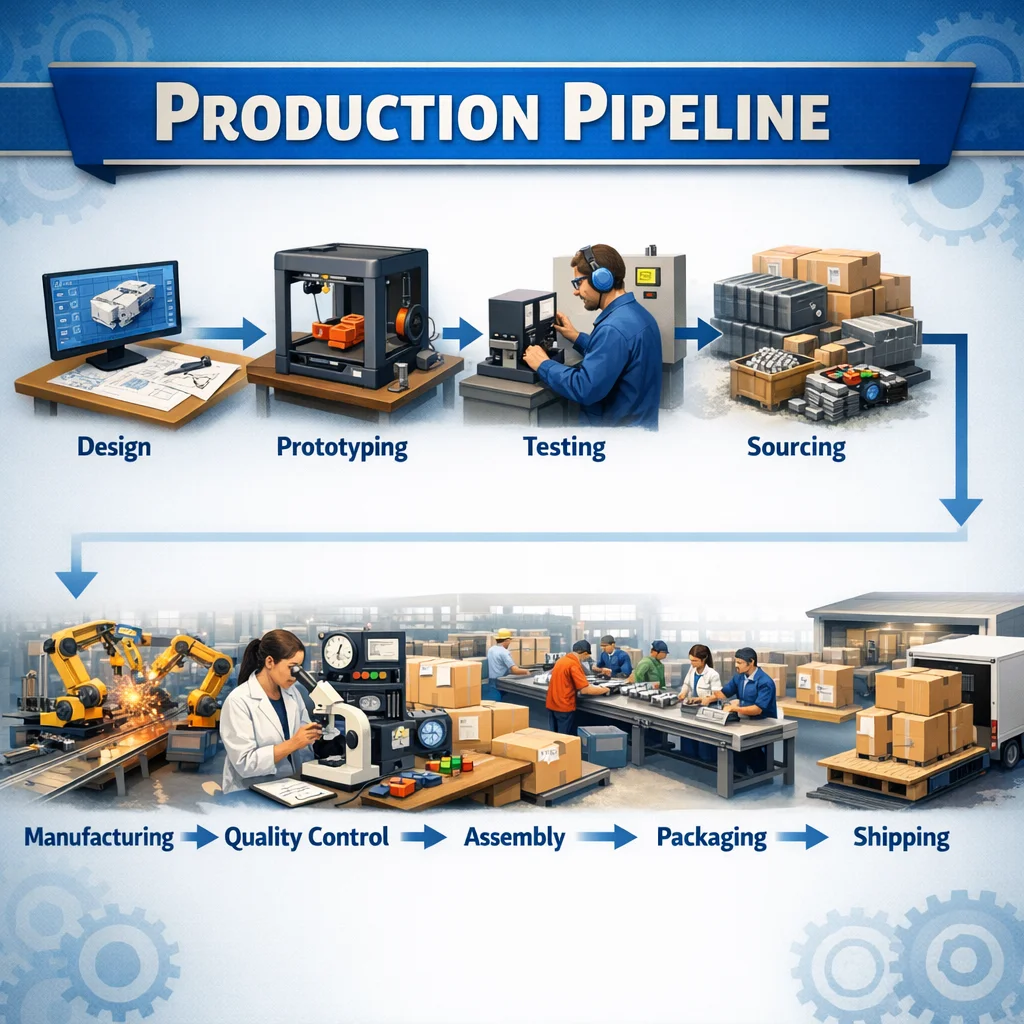

Demand for video content is massive, but production is expensive and slow. This system automates the core workflow, from content ingestion through script writing, visuals, narration, assembly, and upload, enabling a scalable content operation with consistent output quality.

The creator economy has exploded to $12B, yet most creators face the same bottleneck: producing quality video is time-consuming and costly. Traditional production requires a team of writers, designers, voice artists, and editors. Our pipeline replaces that entire workflow with an orchestrated chain of best-in-class AI services, turning a single URL into a fully rendered, narrated video ready for upload in minutes rather than days.

The platform is designed for horizontal scalability. Each pipeline stage runs independently, so operators can run multiple video productions concurrently across different content niches. The result is a shift from a labor-intensive creative process to a predictable content operation with measurable unit economics.