Investor Summary

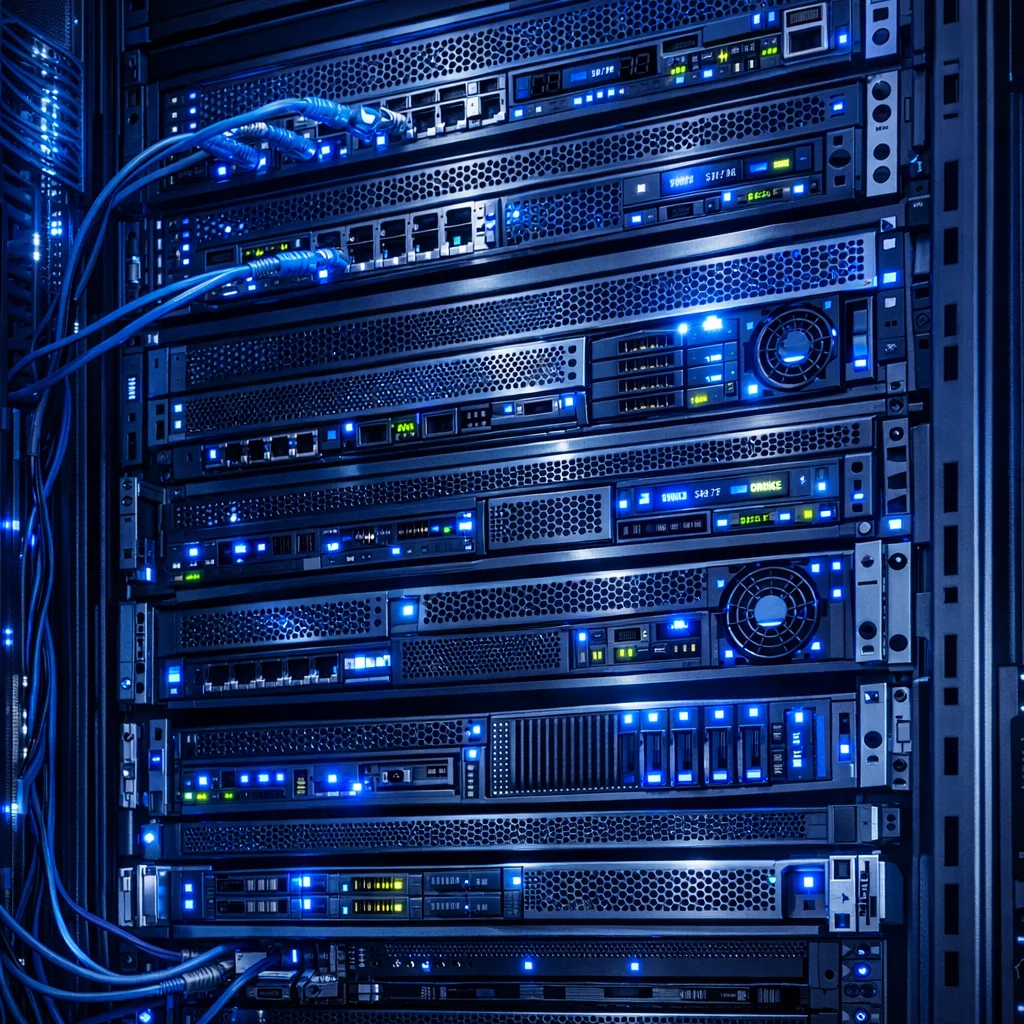

This platform is the operational backbone for the entire company. It delivers a multi-service, AI-ready environment with automated secret management, full observability, and disaster recovery, enabling rapid experimentation and production-grade deployment without recurring public cloud costs.

While competitors burn through six-figure annual cloud budgets, our infrastructure runs AI workloads at near-zero marginal cost, because the hardware is already owned and each additional workload adds little beyond power and maintenance. The platform hosts more than 50 containerized services, including model inference, vector databases, and real-time analytics, all with enterprise-grade monitoring and automated failover. Compared with equivalent AWS or Azure deployments, the hardware investment pays for itself within the first year.
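The payback claim above rests on simple arithmetic: the up-front hardware cost divided by the monthly savings relative to a cloud bill. The sketch below shows that calculation only. The function name `payback_months` and every input value are hypothetical placeholders chosen for illustration, not figures from this summary.

```python
def payback_months(hardware_cost: float,
                   monthly_cloud_cost: float,
                   monthly_onprem_opex: float) -> float:
    """Months until cumulative savings cover the up-front hardware cost."""
    # Savings are the avoided cloud bill minus what on-prem still costs
    # to run each month (power, connectivity, maintenance).
    monthly_savings = monthly_cloud_cost - monthly_onprem_opex
    if monthly_savings <= 0:
        raise ValueError("on-prem operating cost must be below the cloud bill")
    return hardware_cost / monthly_savings


# Placeholder inputs for illustration only (assumed, not reported figures).
months = payback_months(hardware_cost=60_000,
                        monthly_cloud_cost=10_000,
                        monthly_onprem_opex=2_000)
print(f"Payback period: {months:.1f} months")
```

Under this model, the first-year payback holds whenever the result is 12 or less, so the claim can be checked by substituting the actual purchase price, the quoted cloud equivalent, and measured operating costs.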

The architecture uses a dual-server topology connected over a 2.5 Gb/s private network. ZFS provides enterprise-grade data integrity through block-level checksumming, copy-on-write snapshots, and automatic self-healing, in which a block that fails its checksum is repaired from a redundant copy. This foundation is designed to give AI workloads the storage reliability expected of Fortune 500 data centers.