How It Works

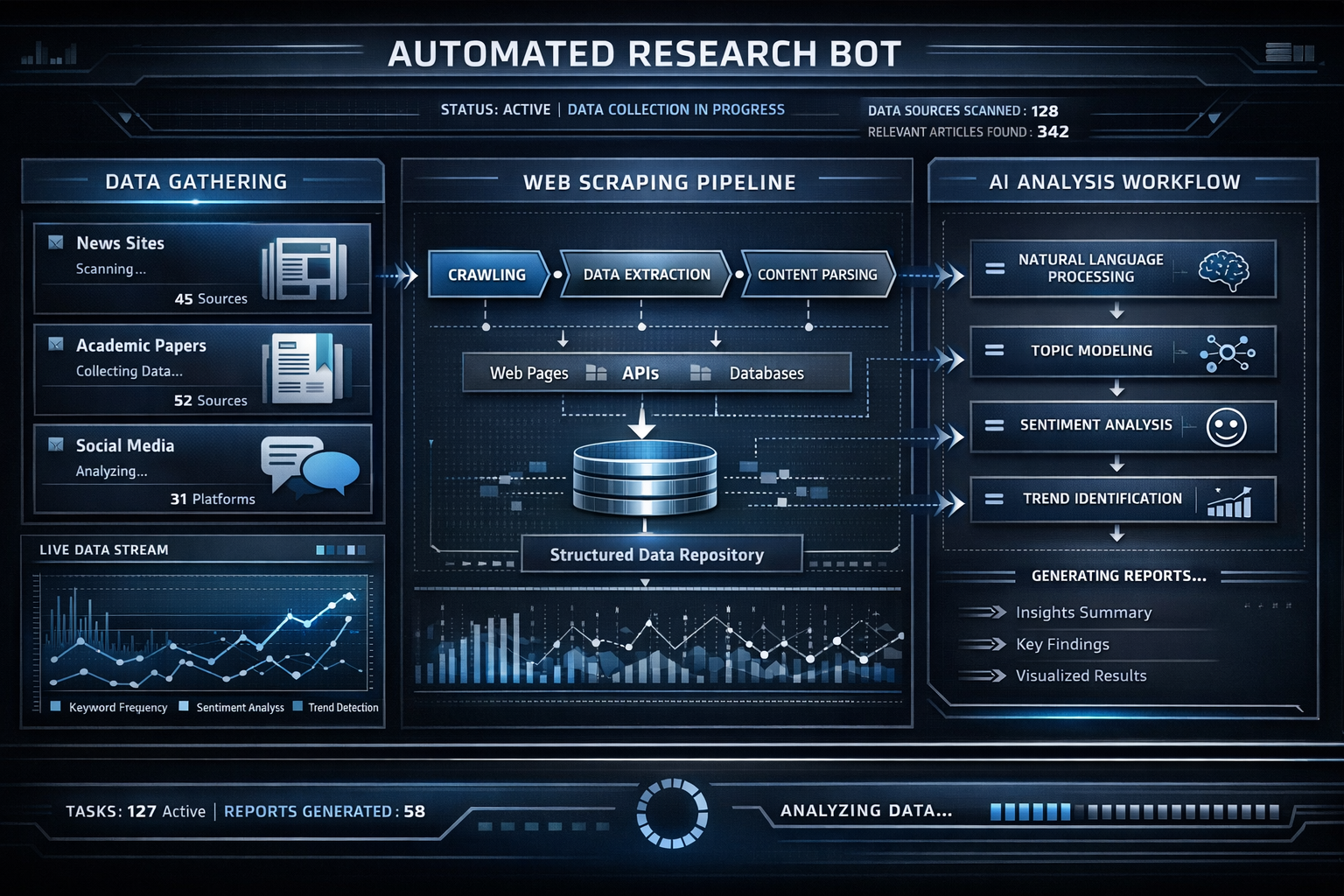

The pipeline begins with multi-source ingestion. Custom scrapers monitor subreddits such as r/SideProject and r/Entrepreneur, parse Indie Hackers discussion threads, and run targeted Google dork queries (searches built from advanced operators) designed to surface early-stage business signals that traditional market research tools often miss.
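As a rough illustration of the dorking step, the sketch below combines Google's `site:` operator with exact-phrase matching to generate one query per site and phrase. The site list, the signal phrases, and the `build_dork_queries` helper are assumptions made for this example, not the pipeline's actual configuration.

```python
from itertools import product

# Hypothetical inputs: the real pipeline's sites and phrases are not documented here.
SITES = ["reddit.com/r/SideProject", "reddit.com/r/Entrepreneur", "indiehackers.com"]
PHRASES = ["would pay for", "is there a tool that"]


def build_dork_queries(sites, phrases):
    """Pair every site with every phrase as a `site:` + exact-phrase query."""
    return [f'site:{site} "{phrase}"' for site, phrase in product(sites, phrases)]


queries = build_dork_queries(SITES, PHRASES)
for query in queries:
    print(query)
```

Generating the queries from two small lists keeps the search surface easy to audit and extend: adding one phrase adds one query per monitored site.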

Raw text from each source passes through a preprocessing stage that normalizes formatting, extracts key entities, and removes noise. The cleaned content is then forwarded to our local Qwen 30B language model running on dedicated GPU hardware for structured analysis.
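A minimal sketch of what such a preprocessing stage might look like, using only the Python standard library. The specific steps (HTML entity decoding, Unicode normalization, URL stripping, whitespace collapsing) and the regex-based extraction of URLs and dollar amounts are assumptions chosen for illustration; the actual stage may normalize and extract differently.

```python
import html
import re
import unicodedata

URL_RE = re.compile(r"https?://\S+")
# Dollar amounts such as $500, $2,500, $1.5M, or $5k
MONEY_RE = re.compile(r"\$\d+(?:,\d{3})*(?:\.\d+)?[kKmM]?")
WS_RE = re.compile(r"\s+")


def preprocess(raw: str) -> dict:
    """Normalize formatting, pull out simple entities, and drop noise."""
    text = html.unescape(raw)                   # decode entities like &nbsp; and &amp;
    text = unicodedata.normalize("NFKC", text)  # fold compatibility characters
    urls = URL_RE.findall(text)                 # keep links as extracted entities
    text = URL_RE.sub(" ", text)                # remove them from the body text
    text = WS_RE.sub(" ", text).strip()         # collapse runs of whitespace
    money = MONEY_RE.findall(text)
    return {"text": text, "urls": urls, "money": money}


sample = "Made&nbsp;$2,500 MRR in 3 months!\n\n  Details: https://example.com/post?id=1  "
result = preprocess(sample)
print(result)
```

Returning the cleaned text and the extracted entities together lets the downstream model prompt include both without re-parsing the raw post.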

The LLM evaluates each opportunity against a consistent rubric covering market size, competitive density, technical complexity, monetization potential, and time-to-revenue. Each scored opportunity is then embedded as a dense vector and stored in ChromaDB alongside its metadata, enabling both semantic similarity search and structured filtering.
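The rubric and the structured-filtering side of this step can be sketched as follows. The five field names mirror the criteria listed above, but the 1-10 scale, the weights, the `composite` score, and the `filter_records` helper are all hypothetical. The embedding and ChromaDB calls are deliberately left out; the sketch only shows a flat, scalar-valued metadata record of the kind that could be stored next to each vector.

```python
from dataclasses import asdict, dataclass

# Hypothetical weights: the rubric names its criteria but not how they are weighted.
WEIGHTS = {
    "market_size": 0.25,
    "competitive_density": 0.20,
    "technical_complexity": 0.15,
    "monetization_potential": 0.25,
    "time_to_revenue": 0.15,
}


@dataclass
class RubricScores:
    # Assumed scale: 1-10 per criterion, higher meaning more favorable.
    market_size: int
    competitive_density: int
    technical_complexity: int
    monetization_potential: int
    time_to_revenue: int

    def composite(self) -> float:
        """Weighted sum of the five criteria, rounded to two decimals."""
        return round(sum(WEIGHTS[k] * v for k, v in asdict(self).items()), 2)

    def to_metadata(self, source: str) -> dict:
        """Flat dict of scalars, suitable for storing alongside an embedding."""
        return {**asdict(self), "composite": self.composite(), "source": source}


def filter_records(records, min_composite):
    """Structured filter: keep records whose composite score meets the threshold."""
    return [r for r in records if r["composite"] >= min_composite]


records = [
    RubricScores(8, 6, 7, 9, 5).to_metadata(source="reddit"),
    RubricScores(3, 4, 5, 2, 6).to_metadata(source="indiehackers"),
]
strong = filter_records(records, min_composite=6.0)
print(strong)
```

Keeping the scores as separate metadata fields, rather than storing only the composite, allows later filtering on any single criterion without re-running the model.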