Voice Energy — Your Tone Tells a Story

With explicit, revocable consent, AuraLLM captures a brief voice sample and extracts derived features entirely on-device. Raw audio is processed in memory and discarded immediately; only three numeric scores persist: Speaking Rate (cadence in words per minute), Tone Warmth (emotional warmth, 0-100), and Vocal Energy (estimated energy, 0-100). A planned TFLite prosody model integration is intended to deepen the analysis without changing these privacy guarantees.
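To make "only numeric scores persist" concrete, here is a minimal sketch of what the stored record might look like. The names `VoiceScores` and `words_per_minute` are hypothetical and not taken from AuraLLM; the sketch assumes the warmth and energy scores are integers and that speaking rate is derived from a word count and a duration. It contains no field for raw audio, which is the point of the design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VoiceScores:
    """Hypothetical record of the only values that persist after analysis."""

    speaking_rate_wpm: float  # cadence in words per minute
    tone_warmth: int          # emotional warmth, 0-100
    vocal_energy: int         # estimated energy, 0-100

    def __post_init__(self) -> None:
        # Reject values outside the documented ranges.
        if self.speaking_rate_wpm < 0:
            raise ValueError("speaking_rate_wpm must be non-negative")
        for name in ("tone_warmth", "vocal_energy"):
            value = getattr(self, name)
            if not 0 <= value <= 100:
                raise ValueError(f"{name} must be between 0 and 100")


def words_per_minute(word_count: int, duration_seconds: float) -> float:
    """Convert a word count over a sample duration into words per minute."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return word_count / duration_seconds * 60.0


# Example: 30 words spoken in a 12-second sample.
scores = VoiceScores(
    speaking_rate_wpm=words_per_minute(30, 12.0),
    tone_warmth=72,
    vocal_energy=64,
)
```

Because the record is frozen and carries no audio buffer, anything written to storage is limited to these three numbers.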

Habit Tracking — Small Actions, Big Impact

Log the habits that shape your energy every day across six categories: Meal, Sleep, Exercise, Hydration, Meditation, and Social. Each entry is rated 1-5 for quality. A rolling 3-day average of these ratings drives the Habit Bonus, which is added to Others Impact: excellent habits (average 4-5) add +10 to +15 points, good habits (3-4) add +5 to +10, and building habits (1-3) add 0 to +5. Consistent positive actions compound visibly over days.
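The tiers above can be sketched as a small function. This is an illustration under stated assumptions, not AuraLLM's actual scoring code: the names `rolling_average` and `habit_bonus` are hypothetical, the input is assumed to be one average rating per day, and the bonus is assumed to scale linearly within each tier (the text gives only the ranges). With linear scaling the shared boundaries at 3 and 4 give the same bonus from either tier, so the overlap in the tier ranges causes no ambiguity.

```python
from statistics import mean

# (rating lower bound, rating upper bound, minimum bonus, maximum bonus)
TIERS = [
    (4.0, 5.0, 10.0, 15.0),  # excellent
    (3.0, 4.0, 5.0, 10.0),   # good
    (1.0, 3.0, 0.0, 5.0),    # building
]


def rolling_average(daily_ratings, window=3):
    """Average the most recent `window` daily ratings."""
    recent = daily_ratings[-window:]
    if not recent:
        raise ValueError("at least one daily rating is required")
    return mean(recent)


def habit_bonus(average):
    """Map a 1-5 rolling average to a bonus, assuming linear scaling per tier."""
    if not 1.0 <= average <= 5.0:
        raise ValueError("average must be between 1 and 5")
    for low, high, bonus_min, bonus_max in TIERS:
        if average >= low:
            fraction = (average - low) / (high - low)
            return bonus_min + fraction * (bonus_max - bonus_min)
    raise AssertionError("unreachable: every valid average matches a tier")


# Example: four days logged, only the last three count.
bonus = habit_bonus(rolling_average([2, 5, 4, 3]))
```

In the example the last three ratings are 5, 4, and 3, so the rolling average is 4 and the bonus sits at the bottom of the excellent tier.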

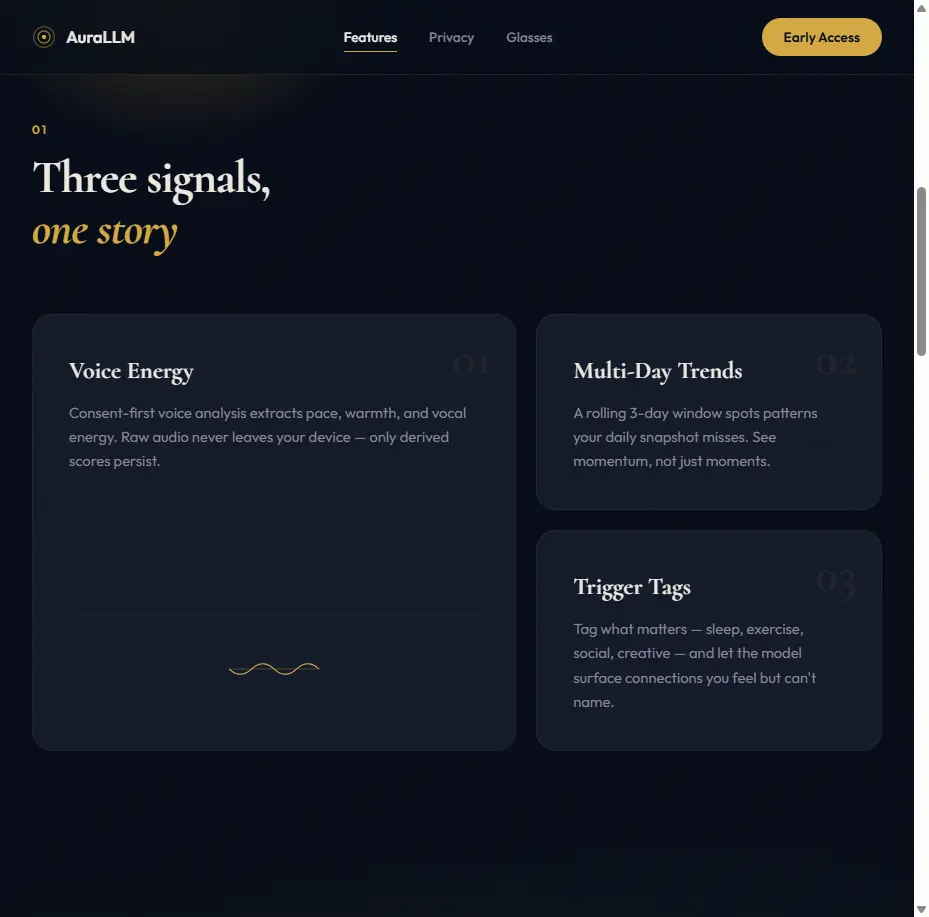

Trigger Tags — Name What Moves You

Tag what's influencing your energy today and let the model surface connections you feel but can't name. Six tag categories (Sleep, Exercise, Social, Work, Nature, and Creative) capture the dimensions of daily life that shape your energy portrait. The LLM weaves these triggers into personalized reflective narratives, revealing patterns across days and weeks that manual journaling might miss.
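One way the tags might reach the LLM is as a compact, date-ordered summary inside a prompt. The sketch below is illustrative only: `TriggerTag`, `build_reflection_prompt`, the prompt wording, and the ISO date keys are all assumptions, and the actual call to the model is deliberately left out.

```python
from enum import Enum
from typing import Mapping, Sequence


class TriggerTag(Enum):
    """The six tag categories named in the text."""

    SLEEP = "Sleep"
    EXERCISE = "Exercise"
    SOCIAL = "Social"
    WORK = "Work"
    NATURE = "Nature"
    CREATIVE = "Creative"


def build_reflection_prompt(tags_by_day: Mapping[str, Sequence[TriggerTag]]) -> str:
    """Render tagged days, sorted by date string, as a prompt for reflection."""
    lines = ["Reflect on how these tagged triggers relate to the user's energy:"]
    for day in sorted(tags_by_day):
        names = ", ".join(tag.value for tag in tags_by_day[day])
        lines.append(f"- {day}: {names}")
    return "\n".join(lines)


# Example with hypothetical ISO dates as keys.
prompt = build_reflection_prompt({
    "2024-05-02": [TriggerTag.WORK],
    "2024-05-01": [TriggerTag.SLEEP, TriggerTag.NATURE],
})
```

Sorting by date keeps multi-day patterns in chronological order, which matters if the narrative is meant to span days and weeks.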